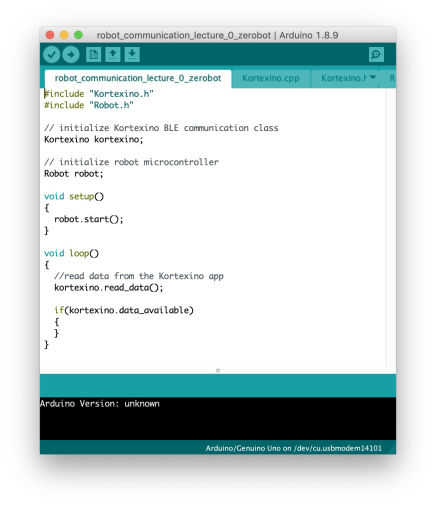

Download the zerobot code and library here. Open it in the Arduino IDE and inspect the main zerobot file shown in the image to the left. Don't worry about the other files: they only contain the code for the Bluetooth and robot communication, which always stays the same.

The zerobot file shown to the left, however, does not do much. It only starts the Bluetooth communication and initializes control of the motors built into the Kortexino bot.
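As a rough orientation, a sketch that only does these two things might have the shape below. This is a minimal illustration, not the actual zerobot code: `startBluetooth()`, `initMotors()`, and the two status flags are hypothetical placeholders for whatever the downloaded library provides, so check the real file for the actual names.

```cpp
// Hypothetical skeleton of a "do nothing yet" robot sketch.
// All names below are placeholders, NOT the real zerobot API.

bool bluetoothStarted = false;  // set once the Bluetooth link is started
bool motorsReady = false;       // set once the motor control is initialized

// Placeholder for the Bluetooth startup handled by the other project files.
void startBluetooth() {
  bluetoothStarted = true;
}

// Placeholder for the motor initialization of the robot.
void initMotors() {
  motorsReady = true;
}

// Runs once after power-up or reset.
void setup() {
  startBluetooth();
  initMotors();
}

// Runs repeatedly after setup(). It is empty here, which is why the
// robot does not show any behavior yet.
void loop() {
}
```

In a layout like this, new robot behavior would typically be added inside `loop()`, while `setup()` stays unchanged.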

In the following challenges, your task is to extend the zerobot file to generate more interesting robot behaviors.